Hongliang Lu (卢红亮)

Welcome to my personal homepage! I am a third-year M.E. student in Mechanical Engineering at the College of Engineering, Peking University, advised by Prof. Zaiwen Wen. I received my B.E. degree in Robotics Engineering from Peking University in 2023.

My research centers on the synergy between Reinforcement Learning and Large Language Models, focusing on three key directions:

-

RL for LLMs: Developing data-efficient reinforcement learning algorithms to enhance post-training effectiveness, aiming to improve model performance and alignment with human preferences;

-

Agentic RL: Designing novel RL methods to advance autonomous agent capabilities, with a particular emphasis on self-evolving mechanisms that push the boundaries of agent performance through continuous self-improvement and autonomous capability scaling;

-

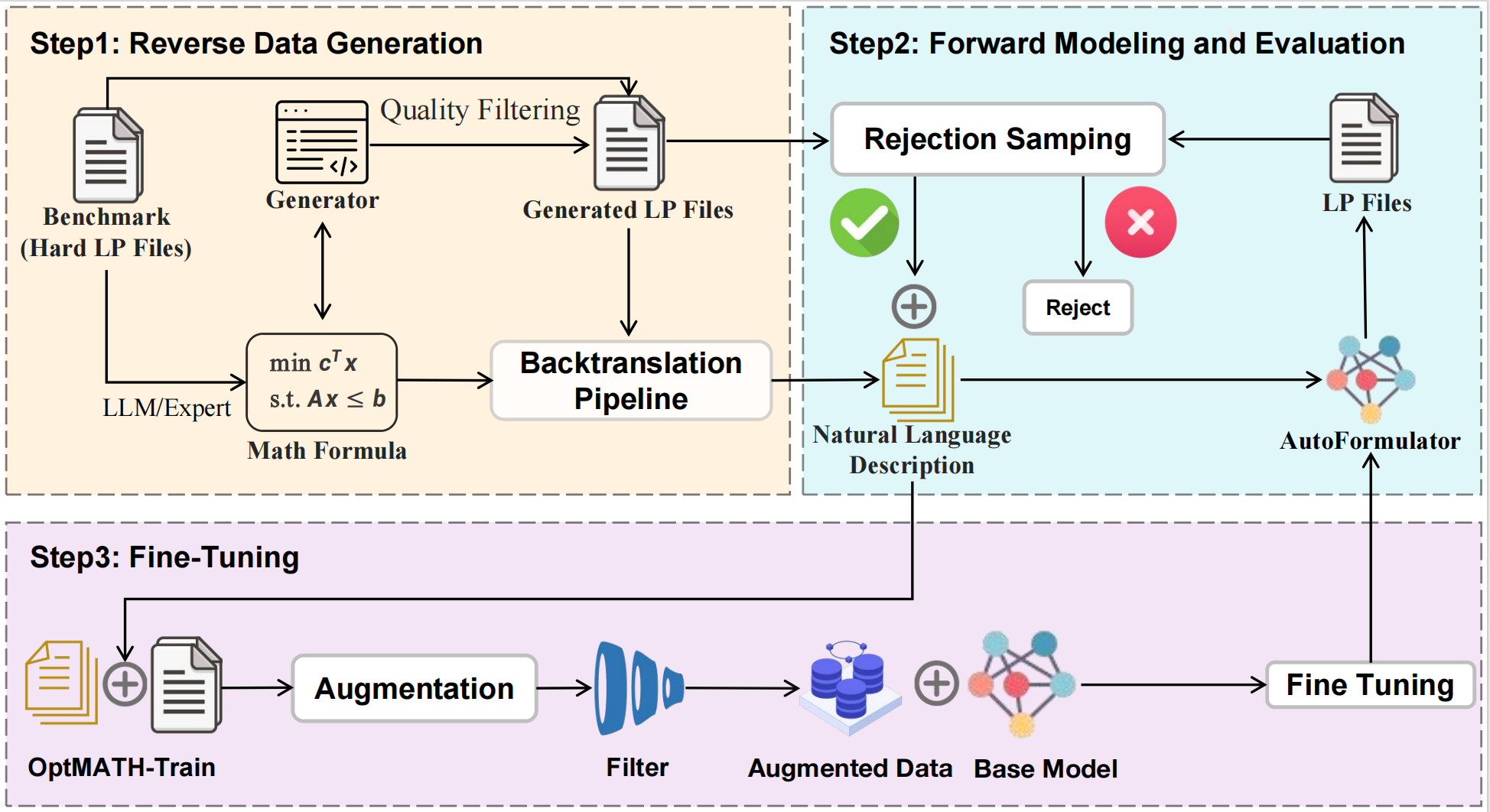

LLMs for Optimization: Leveraging the reasoning capabilities of large language models to tackle complex optimization modeling and decision-making problems.

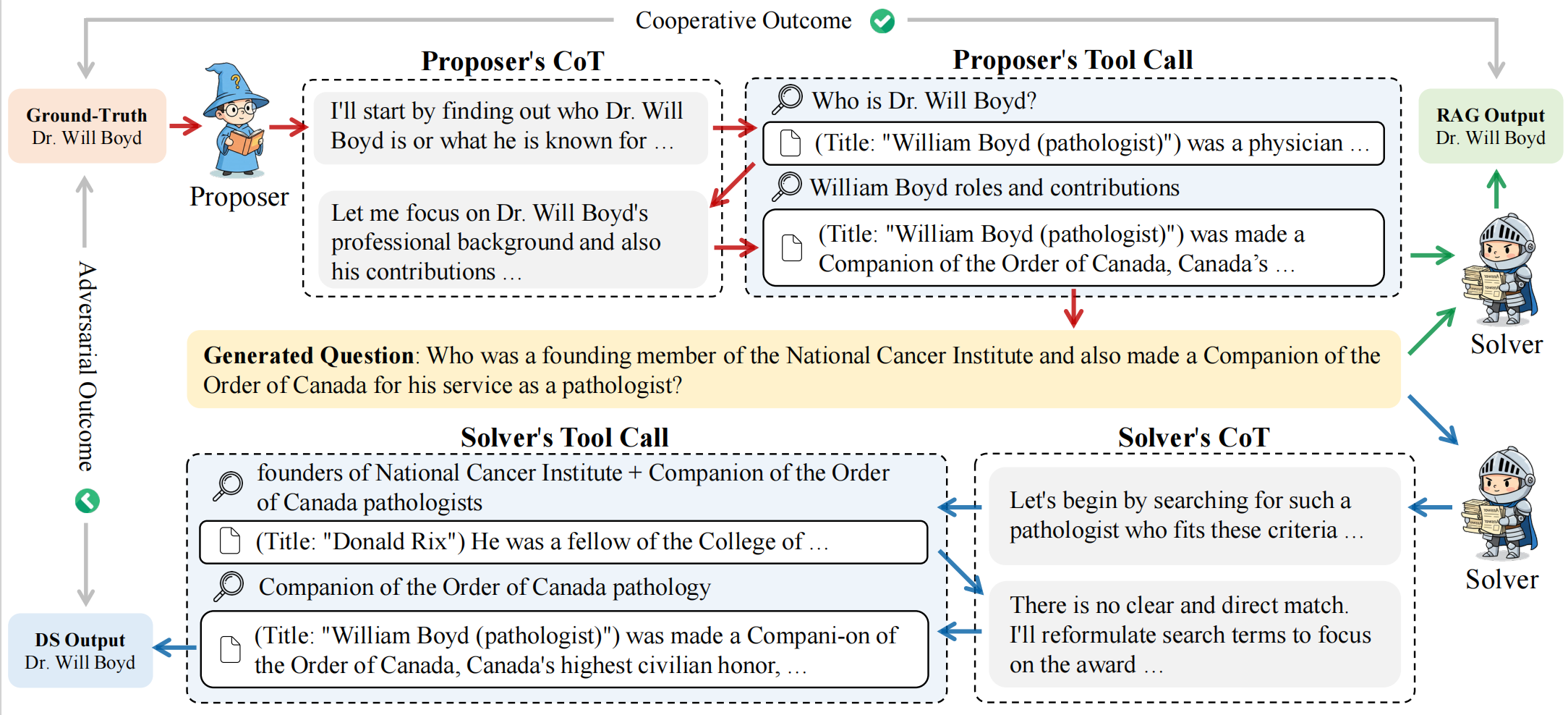

I have interned at two leading AI companies. At Alibaba’s QuarkLLM team (2025.05-2025.09), I contributed to the Deep Search project, designing RL algorithms to strengthen the Deep Search agent’s performance on tasks requiring multi-step reasoning and complex retrieval. Previously, at Moonshot AI (2025.01-2025.05), I worked on data synthesis and RL training for their WebAgent.

news

| Date | News |
|---|---|
| Jan 28, 2026 | Our paper “Search Self-Play: Pushing the Frontier of Agent Capability without Supervision” has been accepted to ICLR 2026! 🎉 |
| Oct 22, 2025 | We are excited to release our latest research work in Agentic RL: “Search Self-Play: Pushing the Frontier of Agent Capability without Supervision”! 🚀 The paper has been submitted to ICLR 2026 and explores novel self-play training methods for enhancing agent capabilities without supervision. |
| May 01, 2025 | Our paper “OptMATH: A Scalable Bidirectional Data Synthesis Framework for Optimization Modeling” has been accepted as a poster presentation at ICML 2025! 🎉 |

selected publications

latest posts

| Date | Post |
|---|---|
| Nov 08, 2024 | Scaling Law |
| Jul 26, 2024 | Transformer Architecture Explained: Attention is All You Need |
| Jul 26, 2024 | Understanding Attention Mechanism: Self-Attention and Attention Models |